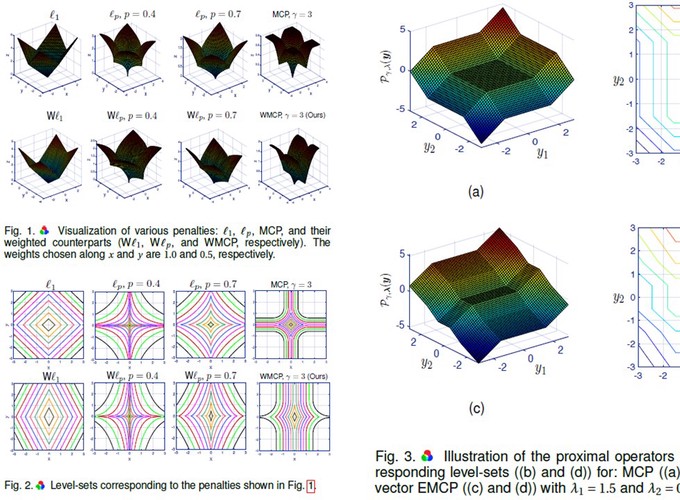

Image from the paper

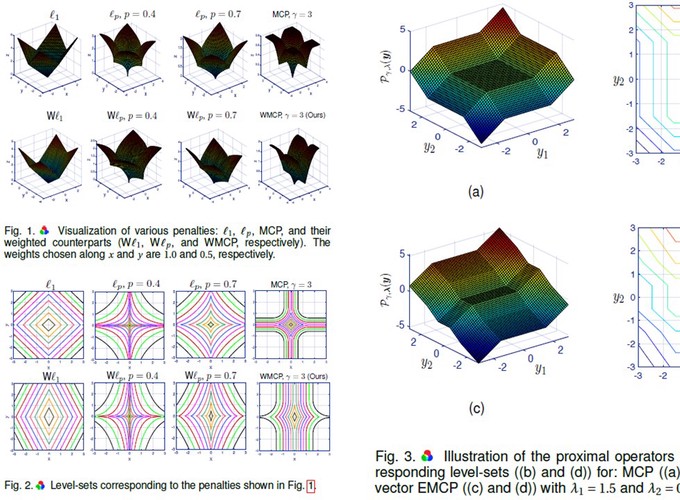

Image from the paper

Low-rank plus sparse matrix decomposition (LSD) is an important problem in computer vision and machine learning. It has been solved using convex relaxations of the matrix rank and the ℓ0-pseudo-norm, namely the nuclear norm and the ℓ1-norm, respectively. Convex approximations are known to produce biased estimates. To overcome this bias, nonconvex regularizers such as weighted nuclear-norm minimization and weighted Schatten p-norm minimization have been proposed. However, works employing these regularizers have used heuristic weight-selection strategies. We propose the weighted minimax-concave penalty (WMCP) as the nonconvex regularizer and show that it admits an equivalent representation that enables weight adaptation. Similarly, an equivalent representation of the weighted matrix gamma norm (WMGN) enables weight adaptation for the low-rank part. The optimization algorithms are based on the alternating direction method of multipliers (ADMM). We show that the optimization frameworks relying on the two penalties, WMCP and WMGN, coupled with a novel iterative weight-update strategy, result in accurate low-rank plus sparse matrix decomposition. The algorithms are also shown to satisfy descent properties and convergence guarantees. On the applications front, we consider the problem of foreground-background separation in video sequences. Simulation experiments and validations on standard datasets, namely I2R, CDnet 2012, and BMC 2012, show that the proposed techniques outperform the benchmark techniques.
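For orientation, the convex baseline that the abstract contrasts against (nuclear norm for the low-rank part, ℓ1-norm for the sparse part) can be sketched with a few lines of ADMM. This is not the proposed WMCP/WMGN method and includes no weight adaptation; it is a minimal illustration of the standard relaxation, minimizing ‖L‖_* + λ‖S‖_1 subject to M = L + S. The function names (`soft_threshold`, `svt`, `rpca_admm`) and the default choices of `lam` and `mu` are assumptions made for this sketch, following common practice rather than anything stated in the paper.

```python
import numpy as np

def soft_threshold(X, tau):
    """Entrywise soft thresholding: the proximal operator of tau * l1-norm."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)

def svt(X, tau):
    """Singular value thresholding: the proximal operator of tau * nuclear norm."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * soft_threshold(s, tau)) @ Vt

def rpca_admm(M, lam=None, mu=None, n_iter=500, tol=1e-7):
    """Convex low-rank plus sparse decomposition M ~ L + S via ADMM.

    Baseline only: nuclear norm + l1-norm, fixed penalty parameter mu.
    The defaults for lam and mu are common heuristics (assumptions here).
    """
    M = np.asarray(M, dtype=float)
    m, n = M.shape
    if lam is None:
        lam = 1.0 / np.sqrt(max(m, n))
    if mu is None:
        mu = 0.25 * m * n / (np.abs(M).sum() + 1e-12)

    L = np.zeros_like(M)
    S = np.zeros_like(M)
    Y = np.zeros_like(M)  # Lagrange multiplier for the constraint M = L + S
    norm_M = np.linalg.norm(M) + 1e-12

    for _ in range(n_iter):
        L = svt(M - S + Y / mu, 1.0 / mu)             # low-rank update
        S = soft_threshold(M - L + Y / mu, lam / mu)  # sparse update
        R = M - L - S                                 # constraint residual
        Y = Y + mu * R                                # dual ascent step
        if np.linalg.norm(R) / norm_M < tol:
            break
    return L, S
```

In a foreground-background setting, each column of `M` would hold one vectorized video frame, so that `L` captures the largely static background and `S` the moving foreground. Both thresholding steps shrink every surviving value by the same amount, which is the source of the bias the abstract mentions; the nonconvex penalties replace these two proximal steps with less aggressive ones.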

Supplementary material: code and math.

* Both authors contributed equally.