GenAI: Model Context Protocol (MCP): From Fundamentals to Real‑World Applications

Introduction

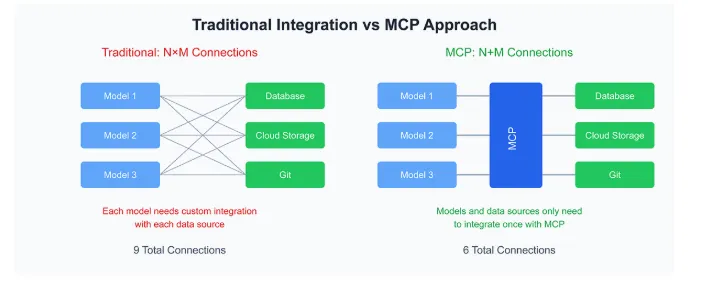

AI models are incredibly powerful, but integrating them with the diverse tools and data sources that organizations use is a major challenge. Traditionally, developers had to build one-off connectors for each data source to provide context to the AI – a fragmented “N×M” integration problem where N models each need to integrate with M systems. This approach doesn’t scale, leading to isolated AI assistants that lack access to up-to-date information. For example, an AI coding assistant might not automatically have access to your code repository or issue tracker without custom integration. Each new tool required another bespoke plugin or API call in the code.

Traditional integration vs. MCP approach. On the left, each AI application (Model 1–3) requires a custom integration to each data source (Database, Cloud Storage, Git), resulting in a tangle of N×M connections. On the right, MCP introduces a single standardized layer between models and data sources. Each model and each data source integrates once with MCP, reducing complexity to N+M connections and eliminating redundant adapters (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents).
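To make the scaling argument concrete, here is a small sketch of the arithmetic. The function names and the example counts are illustrative only, not figures from any real deployment.

```python
def point_to_point(models: int, sources: int) -> int:
    """Every model needs its own connector to every data source."""
    return models * sources


def via_shared_protocol(models: int, sources: int) -> int:
    """Each model and each data source implements the protocol once."""
    return models + sources


# Illustrative sizes only: (number of models, number of data sources)
for n, m in [(3, 3), (5, 20), (10, 50)]:
    print(
        f"{n} models, {m} sources: "
        f"{point_to_point(n, m)} custom connectors vs "
        f"{via_shared_protocol(n, m)} protocol integrations"
    )
```

With three models and three sources the difference is small (9 vs 6), but it widens quickly as either side grows.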

To address this challenge, Anthropic introduced the Model Context Protocol (MCP) in late 2024 (The Model Context Protocol (MCP) — A Complete Tutorial). In a nutshell, MCP is a universal, open standard for connecting AI systems to where the data lives – from content repositories and databases to business apps and development tools (Introducing the Model Context Protocol \ Anthropic). Instead of writing custom code for each integration, developers can now plug into MCP’s standardized interface. Think of MCP like a “USB-C for AI” – a single, consistent port that lets AI models connect to any compatible tool or data source (Model Context Protocol (MCP) - Anthropic). This post provides a deep dive into MCP’s theory, how to implement it, and what it enables in practice, from basic integration steps to advanced real-world applications.

Understanding the Model Context Protocol

Definition and Purpose: The Model Context Protocol (MCP) is an open protocol (developed by Anthropic’s team, including lead author Mahesh Murag) designed to standardize how AI assistants (like GPT-based models or Claude) interact with external systems, tools, and data (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents). MCP provides a secure, two-way connection between an AI application and the outside world of information. By using MCP, an AI model can query data or invoke operations on external services through a unified interface. This dramatically improves the relevance of model responses, since the assistant is no longer “trapped” behind data silos. As Anthropic’s documentation puts it, “MCP provides a standardized way to connect AI models to different data sources and tools,” much like a universal port that works everywhere (Model Context Protocol (MCP) - Anthropic). In practical terms, MCP replaces a patchwork of ad-hoc integrations with a single protocol – so once an AI client supports MCP, it can talk to any MCP-compatible server (and vice versa) without additional custom code.

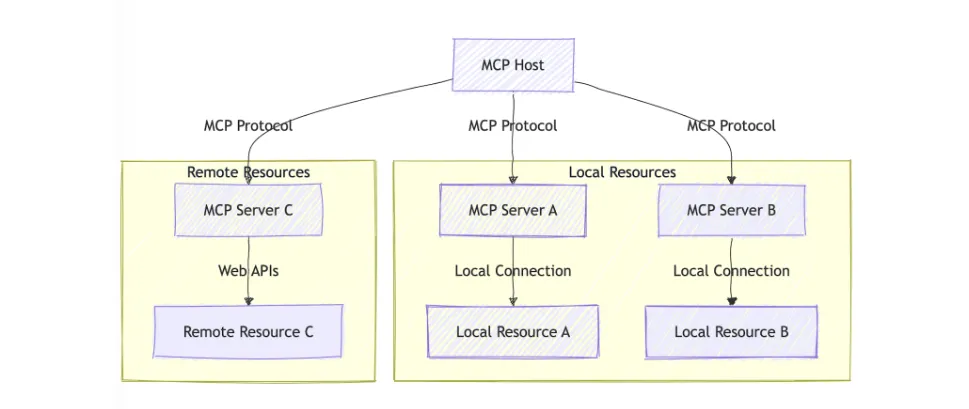

Core Architecture: MCP follows a flexible client–server architecture within a host environment (Core architecture - Model Context Protocol). There are three primary roles:

- MCP Host: an application or platform that hosts the AI model and initiates connections to MCP servers. Examples of hosts include the Claude Desktop app, IDE plugins, or any AI-powered application wanting external data (Introduction - Model Context Protocol). The host is essentially where the user interacts with the AI assistant.

- MCP Client: a client component (often a library in the host application) that manages a 1:1 connection with each MCP server. The client speaks the MCP protocol, forwarding the AI’s requests to the server and returning responses. In many implementations, the distinction between “host” and “client” is subtle – the client library runs inside the host, handling the protocol details (Core architecture - Model Context Protocol).

- MCP Server: a lightweight service that exposes a specific data source or tool via the MCP standard. Each server acts as a bridge to some external system: it could serve files from your computer, retrieve data from a database or API, or perform actions (like posting a message or running a command). Servers provide context (data) and capabilities (operations) to the AI through MCP (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents). Multiple servers can run concurrently, each handling a different resource or function.

Communication between MCP clients and servers is standardized. All messages use JSON-RPC 2.0, a simple JSON-based request-response protocol (Core architecture - Model Context Protocol). Depending on the environment, MCP supports different transport mechanisms for these JSON-RPC messages:

- Stdio: For local servers, MCP can use standard input/output streams (stdin/stdout) to send and receive JSON messages (Core architecture - Model Context Protocol). This is ideal when the server is a subprocess on the same machine as the host (as is often the case with Claude Desktop’s local servers).

- HTTP + SSE: For remote or networked servers, MCP can run over HTTP. The client sends requests via HTTP POST, and the server pushes asynchronous results or updates back via Server-Sent Events (SSE) (Core architecture - Model Context Protocol). This allows streaming data or long-running operations to update the client in real-time.

Importantly, the interactions are bi-directional and stateful. An AI client can call a tool on the server, and a server can also send notifications or requests back (for example, requesting the AI to perform a sub-task, as we’ll see with the “sampling” feature). All of this is handled within MCP’s message framework. The use of JSON-RPC means the protocol is language-agnostic and well-structured, linking each request to a response clearly (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents).
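As a rough illustration of the message framing, the sketch below builds JSON-RPC 2.0 messages by hand using only the standard library. The helper names are ours, not part of any SDK. The method names and the tool result shape follow the MCP specification as we understand it, so treat the payloads as examples rather than a reference.

```python
import itertools
import json

_ids = itertools.count(1)


def make_request(method: str, params: dict) -> dict:
    """A request carries an id so the reply can be matched to it."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def make_notification(method: str) -> dict:
    """A notification has no id, so no reply is expected."""
    return {"jsonrpc": "2.0", "method": method}


def make_result(request: dict, result: dict) -> dict:
    """A response echoes the id of the request it answers."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Client -> server: call the "add" tool
request = make_request("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}})
pending = {request["id"]: request}

# Server -> client: the matching response
response = make_result(request, {"content": [{"type": "text", "text": "5"}]})
matched = pending.pop(response["id"])

# Server -> client: an unsolicited notification (bi-directional traffic)
notification = make_notification("notifications/resources/list_changed")

print(json.dumps(request))
print(json.dumps(response))
print(json.dumps(notification))
```

The id field is what links each request to its response, and its absence is what marks a message as a one-way notification.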

Analogy – MCP as “USB-C for AI.” Just as a single USB-C port allows a laptop to connect to many types of devices (display, storage, network) using a common standard, MCP provides a universal connector between AI assistants and external resources. In this diagram, multiple MCP servers (bottom, with icons for Slack, Gmail, Calendar, local files, etc.) plug into an AI assistant via a single MCP client library (client.py). This unified interface works across different AI hosts (Claude, OpenAI, etc.), making tools interchangeable (Model Context Protocol (MCP) - Anthropic).

Once the host’s MCP client and a server establish a connection, they negotiate capabilities – essentially discovering what features the server provides. The server advertises things like “I have these tools available” or “I can provide these data resources,” and the client can then utilize them. Under the hood, this involves a series of JSON-RPC messages for listing tools, listing resources, etc., which the MCP SDKs handle for you. The key is that the AI model can now treat external data/tools in a standardized way, regardless of where they come from.
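The negotiation step can be pictured roughly as follows. This is a hand-written sketch of an initialize exchange, not SDK output: the client and server names, version strings, and the discovery_calls helper are assumptions made for illustration, and the protocol version shown is the late-2024 revision.

```python
# Client -> server: first message of a session
initialize_request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2024-11-05",
        "capabilities": {"sampling": {}},  # what the client offers
        "clientInfo": {"name": "example-host", "version": "0.1.0"},  # hypothetical
    },
}

# Server -> client: the server advertises which primitives it supports
initialize_result = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "protocolVersion": "2024-11-05",
        "capabilities": {"tools": {"listChanged": True}, "resources": {}},
        "serverInfo": {"name": "example-server", "version": "0.1.0"},  # hypothetical
    },
}

LIST_METHODS = {
    "tools": "tools/list",
    "resources": "resources/list",
    "prompts": "prompts/list",
}


def discovery_calls(server_capabilities: dict) -> list:
    """Return the list methods worth calling for the advertised capabilities."""
    return [method for cap, method in LIST_METHODS.items() if cap in server_capabilities]


print(discovery_calls(initialize_result["result"]["capabilities"]))
# ['tools/list', 'resources/list']
```

Because this example server did not advertise prompts, a client following this logic would skip prompts/list entirely.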

MCP architecture: a host connected to multiple servers. In this example, an AI MCP host (top box) is running two local MCP servers (A and B) and one remote server (C) simultaneously. All communication uses the MCP protocol. Local resources (right, yellow box) like files or databases are accessed via local MCP servers (A, B) running on the same machine (using stdio or similar local connection), while remote resources (left, yellow box) are accessed via an MCP server (C) that calls external web APIs (Introduction - Model Context Protocol) (Core architecture - Model Context Protocol). From the AI’s perspective, interacting with any of these is uniform – it sends a JSON-formatted request and gets structured data or results back. This design lets the assistant maintain context across multiple tools seamlessly and “carry” its context from one tool to another as needed (Introducing the Model Context Protocol \ Anthropic).

MCP Primitives: The Building Blocks

MCP defines a set of core primitives – standardized types of interactions – that form the building blocks for AI-tool integration. A server can offer three main categories of capability, and the client side adds one special capability for advanced AI behavior. These are: Resources, Tools, Prompts, and Sampling (Specification - Model Context Protocol). Let’s break down each:

- Resources: Resources are pieces of data or content that a server exposes for the AI to read as context (Resources - Model Context Protocol). Think of resources as read-only or static data endpoints. They can represent things like files, database records, notes, API responses, images, etc. – essentially any information identified by a URI (Resources - Model Context Protocol). For example, a filesystem server might expose a file as `file:///home/user/report.pdf`, or a GitHub server might expose an issue description as `github://issue/12345`. The AI (via the client) can request to load these resources when needed. Resources are typically application-controlled, meaning the host or user decides which resources to fetch or include (Resources - Model Context Protocol). (In Claude’s Desktop app, for instance, a user must explicitly attach a file resource before the model can see it (Resources - Model Context Protocol).) This ensures the AI doesn’t grab arbitrary data without permission. Resources provide the raw context that the model can then incorporate into its responses.

- Tools: Tools are actions or functions that the server can perform on behalf of the AI (Tools - Model Context Protocol). They are the execution counterpart to resources’ data. Through tools, an AI assistant can invoke operations – for example: call an API, run a database query, send an email, or execute a calculation. Each tool is defined with a name, a description, and an input schema (so the AI knows what parameters it accepts) (Tools - Model Context Protocol). The AI client can discover available tools via a `tools/list` request and call them via `tools/call` with JSON arguments (Tools - Model Context Protocol). Unlike resources, tools are generally model-controlled – i.e. the AI can decide to invoke a tool autonomously (though usually a human user must grant permission before the action is actually executed) (Tools - Model Context Protocol). Tools are analogous to functions in a programming API. For instance, a weather server might offer a `get_forecast(city)` tool, or a database server might offer a `query(sql)` tool. The result of a tool call is returned to the AI as structured data. Tools can even have side effects (e.g. a “send message” tool that posts to Slack).

- Prompts: Prompts are a mechanism to provide reusable prompt templates or workflows from the server to the host (Prompts - Model Context Protocol). Essentially, a server can define some pre-made conversational prompts (with placeholders for variables) that the client can surface to the user or model. These might represent common tasks or multi-step workflows that the AI can carry out. For example, a server might define a prompt called “analyze-code” which internally has a template like: “Analyze the following code for potential improvements: {code_snippet}”. Or a prompt “daily-standup” that guides the model through generating a summary of yesterday’s work and today’s plan. Prompts can accept parameters and can even chain multiple interactions together (Prompts - Model Context Protocol). They are typically user-controlled – a user might select a prompt template from a menu (or type a slash-command that corresponds to a prompt) to initiate that workflow (Prompts - Model Context Protocol). This ensures the user explicitly triggers these predefined interactions. Prompts help standardize complex or repetitive interactions so that both server and AI know the expected pattern.

- Sampling: Sampling is a special feature that allows the server to ask the AI client to generate an AI completion (i.e. call the model) mid-task (Sampling - Model Context Protocol). This is a more advanced, agentic primitive. In effect, it lets the server turn around and say, “I need the model to assist me in formulating the next step.” For example, imagine an MCP server that is orchestrating a multi-step research process – it might use Sampling to have the model summarize a document or brainstorm a plan as one step in the process. When a server sends a `sampling/createMessage` request, it is asking the client to invoke the LLM with a given prompt or partial conversation (Sampling - Model Context Protocol). The client (which has access to the model API) will review the request, possibly adjust it for safety, perform the completion, then return the result back to the server (Sampling - Model Context Protocol). This allows nested LLM calls and more complex agent behaviors, while still keeping the host in control. Sampling requests are always subject to human-in-the-loop control – the host/user must approve them, and the protocol is designed to limit what the server can see (for example, the server might not get to see the full conversation context, only what it specifically asked for) (Sampling - Model Context Protocol) (Specification - Model Context Protocol). This prevents a rogue server from snooping on unrelated parts of your conversation. (Note: As of early 2025, not all MCP clients support sampling yet – for instance, Claude Desktop did not initially support it – but it’s part of the standard for future agentic workflows.)

These four primitives—resources, tools, prompts, and sampling—are the foundational vocabulary of MCP. By combining them, an AI system can be both informed (via resources and prompts) and actionable (via tools and sampling). For example, to answer a complex question, the AI might retrieve some documents as resources, use a prompt template to structure its approach, call a tool to verify some detail, and even use sampling to reflect or chain sub-queries.
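To give a feel for what these primitives look like as data, here is a minimal sketch using plain dictionaries. The get_forecast tool and the analyze-code template come from the examples above. The helper functions, the city value, and the resource text are assumptions for illustration, and the field names follow the MCP specification as we understand it.

```python
# A tool descriptor: name, description, and a JSON Schema for its inputs
get_forecast_tool = {
    "name": "get_forecast",
    "description": "Return the weather forecast for a city",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

# A resource as it might come back from a read: content identified by a URI
resource_contents = {
    "contents": [
        {
            "uri": "github://issue/12345",
            "mimeType": "text/plain",
            "text": "Example issue description",  # placeholder text
        }
    ]
}

# A prompt template with a placeholder the client fills in
ANALYZE_CODE_TEMPLATE = "Analyze the following code for potential improvements: {code_snippet}"


def missing_arguments(tool: dict, arguments: dict) -> list:
    """List required parameters that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


def render_prompt(template: str, **variables: str) -> str:
    """Fill a prompt template with user-supplied values."""
    return template.format(**variables)


print(missing_arguments(get_forecast_tool, {}))                # ['city']
print(missing_arguments(get_forecast_tool, {"city": "Oslo"}))  # []
print(render_prompt(ANALYZE_CODE_TEMPLATE, code_snippet="print('hi')"))
```

The input schema is what lets a client (or the model) check a call before sending it, rather than discovering a missing parameter from a server error.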

Setting Up MCP: A Step-by-Step Guide

Let’s walk through how a developer or researcher can get started with MCP, from setting up the environment to running an integrated AI assistant. We’ll use the example of an open-source MCP setup with Claude (Anthropic’s AI model) as the assistant, since Claude’s desktop app natively supports MCP servers. The process involves three main steps: (1) environment setup, (2) developing an MCP server, and (3) integrating a client (AI app) with the server.

Step 1: Environment Setup – First, ensure you have the necessary SDKs or tools installed and configured. Anthropic has made MCP available via official SDKs in multiple languages (currently Python and TypeScript are fully supported (The Model Context Protocol (MCP) — A Complete Tutorial), with others like Java and C# in development). If you prefer a no-code approach to testing, you can use Claude for Desktop, which includes built-in MCP client support and can run MCP servers locally (Introducing the Model Context Protocol \ Anthropic). For coding, let’s assume Python for now. Install the MCP Python SDK (for example, via pip: pip install "mcp[cli]"), which provides the tools to create servers and clients. You’ll also want to have access to any external APIs you plan to use – for instance, if your server will connect to GitHub or Notion, have their API credentials ready (environment variables or config files set, as appropriate). It’s recommended to use a virtual environment or project environment manager (Anthropic’s docs suggest the uv tool for Python for convenience (Model Context Protocol (MCP): A Guide With Demo Project)). In summary, the setup step is about preparing your development environment and configuring any keys or credentials for the systems your MCP server will interface with. Optionally, you might configure Claude Desktop or your chosen AI host to enable MCP connections (Claude Desktop launches the local MCP servers listed in its configuration file, which you can edit by hand or update via a CLI command).

Step 2: Developing an MCP Server – With the environment ready, the next step is to build an MCP server that defines the tools, resources, and prompts you want to expose. You can start from scratch or customize one of the many pre-built servers Anthropic and the community have provided (for example, there are open-source MCP servers for Google Drive, Slack, Git, GitHub, Notion, databases, etc. that you can reuse (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents)). To illustrate, let’s build a simple custom server in Python. We’ll define two capabilities: a tool and a resource. Using the Python SDK, you can create a server with just a few lines:

```python
from mcp.server.fastmcp import FastMCP

# Create an MCP server instance named "Demo"
mcp = FastMCP("Demo")


# Define a tool (function) that adds two numbers
@mcp.tool()
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


# Define a resource that provides a greeting message
@mcp.resource("greeting://{name}")
def get_greeting(name: str) -> str:
    """Get a personalized greeting"""
    return f"Hello, {name}!"


# Start the server (stdio transport by default) when run as `python server.py`
if __name__ == "__main__":
    mcp.run()
```

In this snippet (adapted from the official MCP SDK quickstart (GitHub - modelcontextprotocol/python-sdk: The official Python SDK for Model Context Protocol servers and clients) (GitHub - modelcontextprotocol/python-sdk: The official Python SDK for Model Context Protocol servers and clients)), we instantiate a server and use decorators to register a tool and a resource. The add tool can be invoked by the AI to perform addition, and the get_greeting resource can be fetched to get a custom greeting string. In a real scenario, your tools and resources would do something more useful – e.g. fetch data from a web service, query a database, or manipulate a file. You can also define prompt templates here (via @mcp.prompt() in code) if you want to expose any canned prompts to the client, and set up any necessary initialization (for example, connecting to a database or logging in to an API).
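If the decorators feel like magic, the following toy registry shows the general idea of what a decorator-based server library can do with a plain function: record it by name, take the description from the docstring, and derive an input schema from the type hints. This is a simplified stand-in written for this post, not the SDK's actual implementation. ToyRegistry and JSON_TYPES are hypothetical names.

```python
import inspect

# Minimal mapping from Python annotations to JSON Schema types (an assumption
# for this sketch; a real library handles far more cases)
JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


class ToyRegistry:
    def __init__(self):
        self.tools = {}

    def tool(self):
        """Decorator factory that registers a function as a callable tool."""
        def register(func):
            params = inspect.signature(func).parameters
            schema = {
                "type": "object",
                "properties": {
                    name: {"type": JSON_TYPES.get(p.annotation, "string")}
                    for name, p in params.items()
                },
                "required": [
                    name for name, p in params.items()
                    if p.default is inspect.Parameter.empty
                ],
            }
            self.tools[func.__name__] = {
                "name": func.__name__,
                "description": inspect.getdoc(func) or "",
                "inputSchema": schema,
                "handler": func,
            }
            return func
        return register

    def call(self, name: str, arguments: dict):
        """Look up a registered tool and invoke it with keyword arguments."""
        return self.tools[name]["handler"](**arguments)


registry = ToyRegistry()


@registry.tool()
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


print(registry.tools["add"]["inputSchema"]["properties"])
print(registry.call("add", {"a": 2, "b": 3}))  # 5
```

The point of the sketch is that the type hints and docstring you already write are enough to produce the name, description, and input schema that a client needs to discover the tool.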

Once your server code is ready, you can run the MCP server. If you’re using Claude Desktop, one convenient way is to use the CLI command mcp install server.py (which registers the server with Claude Desktop so Claude can launch and see it) (GitHub - modelcontextprotocol/python-sdk: The official Python SDK for Model Context Protocol servers and clients). Alternatively, you can start the server in a terminal (e.g. python server.py), which will typically begin listening via stdio or a specified port. The MCP SDK’s CLI also provides an mcp dev command to test servers with an interactive inspector (GitHub - modelcontextprotocol/python-sdk: The official Python SDK for Model Context Protocol servers and clients).
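If you would rather register a server by hand, Claude Desktop reads its server list from a JSON configuration file (claude_desktop_config.json) with an mcpServers section. The sketch below only builds and prints such an entry. The server name "demo" and the path are placeholders, and the exact entry that the CLI writes for you may differ from this one.

```python
import json


def build_config(name: str, command: str, args: list, env: dict = None) -> dict:
    """Build an mcpServers entry telling the host how to launch a server."""
    entry = {"command": command, "args": args}
    if env:
        # Credentials the server needs can be passed as environment variables
        entry["env"] = env
    return {"mcpServers": {name: entry}}


# Placeholder values: adjust the name and path for your own setup
config = build_config("demo", "python", ["/absolute/path/to/server.py"])
print(json.dumps(config, indent=2))
```

Merging this into the existing file (rather than overwriting it) keeps any servers you have already registered.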

Step 3: Client Integration – With a server running, the final step is to connect an AI client to it. If you’re using an existing AI app (like Claude Desktop or another chat interface that supports MCP), this might be as simple as enabling the integration. For instance, Claude Desktop launches the servers registered in its configuration and shows their tools/resources in the UI once they are running. In code, the process involves creating an MCP client and establishing a connection to the server. Under the hood, this means the client opens either a subprocess/stdio channel or an HTTP SSE connection to the server’s endpoint (Core architecture - Model Context Protocol). The details are handled by the SDK if you use one. For example, using the TypeScript SDK, you might do something like:

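```typescript
// Illustrative TypeScript sketch for connecting to a server over stdio
import { Client } from "@modelcontextprotocol/sdk/client/index.js";
import { StdioClientTransport } from "@modelcontextprotocol/sdk/client/stdio.js";

// "example-client" is a placeholder name for this client
const client = new Client({ name: "example-client", version: "1.0.0" }, { capabilities: {} });
const transport = new StdioClientTransport({ command: "node", args: ["my_server.js"] });
await client.connect(transport);
```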

(The above is illustrative – in practice Claude Desktop does this for you when you “install” a server.) Once connected, the client will call list methods to discover the server’s capabilities (e.g. list tools, list prompts). In your AI application’s interface, you should now see the new tools or resources available. As a developer, you can then interact with your AI model and verify that it can indeed call the tools or retrieve the resources from your server.
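To see what the stdio channel amounts to without any SDK, the sketch below spawns a toy server as a subprocess and exchanges newline-delimited JSON-RPC messages over its stdin and stdout. Everything here is a simplified stand-in: the toy server is not an MCP implementation, it skips the initialize handshake a real session starts with, and the rpc helper is a hypothetical name.

```python
import json
import subprocess
import sys

# Toy server source: one JSON-RPC message per line in, one per line out
TOY_SERVER = r'''
import json, sys
TOOLS = [{"name": "add", "description": "Add two numbers",
          "inputSchema": {"type": "object",
                          "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
                          "required": ["a", "b"]}}]
for line in sys.stdin:
    request = json.loads(line)
    reply = {"jsonrpc": "2.0", "id": request["id"]}
    if request["method"] == "tools/list":
        reply["result"] = {"tools": TOOLS}
    elif request["method"] == "tools/call":
        args = request["params"]["arguments"]
        reply["result"] = {"content": [{"type": "text", "text": str(args["a"] + args["b"])}]}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    sys.stdout.write(json.dumps(reply) + "\n")
    sys.stdout.flush()
'''


def rpc(proc, request_id, method, params=None):
    """Send one request over the child's stdin and read one reply from stdout."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params or {}}
    proc.stdin.write(json.dumps(message) + "\n")
    proc.stdin.flush()
    return json.loads(proc.stdout.readline())["result"]


proc = subprocess.Popen(
    [sys.executable, "-c", TOY_SERVER],
    stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True,
)
try:
    tools = rpc(proc, 1, "tools/list")["tools"]
    answer = rpc(proc, 2, "tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}})
finally:
    proc.stdin.close()   # closing stdin ends the server's read loop
    proc.wait(timeout=5)
    proc.stdout.close()

print([tool["name"] for tool in tools])  # ['add']
print(answer["content"][0]["text"])      # 5
```

An SDK client does the same kind of thing on your behalf, plus the handshake, error handling, and concurrency that this sketch leaves out.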

To summarize the development workflow: define what you want the AI to be able to do or access (in code, via MCP primitives), run the server, and connect your AI client to it. Thanks to MCP’s standardized protocol, an AI client only needs to implement this once – it doesn’t matter if the server is for GitHub, Slack, or a custom database, the connection process is the same. And vice versa, your MCP server could work with any AI client that speaks MCP (Claude Desktop, a custom chatbot, maybe even other AI systems in the future) (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents). The heavy lifting of messaging, JSON parsing, and tool invocation is handled by the protocol and SDK, allowing you to focus on the logic of your integration rather than boilerplate.

Real-World Example: Automating PR Reviews

To make things more concrete, let’s walk through a real-world use case where MCP ties multiple tools together: Automating a Pull Request (PR) review workflow. In this scenario, we’ll use Claude (via Claude Desktop) as the AI assistant, and connect it to two external systems using MCP servers: GitHub (to fetch PR details and code diffs) and Notion (to store review notes or updates). This setup can automatically review code changes and document them, streamlining the development lifecycle.

The Workflow: Suppose a developer opens a new pull request on GitHub. We want our AI to fetch the PR’s details, analyze the code changes, and then log the findings to a Notion page for the team to review. Using MCP, we can achieve this with a series of tool calls and resource fetches, all orchestrated by the AI assistant. The pipeline looks like this (Model Context Protocol (MCP): A Guide With Demo Project):

- Environment setup: We prepare a server (let’s call it “PR Reviewer”) with access to the GitHub API and Notion API. This involves setting our GitHub token and Notion integration key as environment variables so the server can use them (Model Context Protocol (MCP): A Guide With Demo Project). We also have Claude Desktop ready to connect.

- Server initialization: Start the PR Reviewer MCP server so that it connects with Claude Desktop (Model Context Protocol (MCP): A Guide With Demo Project). The server registers tools for the tasks it can do – for example, a `fetch_pr` tool to get PR data from GitHub, an `analyze_code` tool that perhaps triggers Claude to summarize code (this could also be done just by Claude’s own capabilities), and a `save_to_notion` tool to create an entry in Notion. It might also expose the PR data (like the diff) as a resource for context.

- Fetching PR data: When a new PR is to be reviewed, the AI (Claude) invokes the server’s GitHub tool to retrieve the PR’s details and code diff from GitHub (Model Context Protocol (MCP): A Guide With Demo Project). Under the hood, this tool uses GitHub’s API to get the list of changed files, the diff, the PR title/description, etc., and returns that data to Claude as a resource or message.

- Code analysis: Claude (the AI model) now has the raw materials – it can see the code changes and the PR description. It proceeds to analyze the code, looking for potential bugs, style issues, or areas of improvement. This step might not even require an MCP tool call since it’s the model’s bread and butter (the model can directly perform the analysis given the diff as context). However, if the server offers a prompt or specialized analysis tool, Claude could use that. In our setup, we assume Claude just uses its own capability to generate a summary of the PR and any suggestions (perhaps guided by a prompt template provided by the server for consistency) (Model Context Protocol (MCP): A Guide With Demo Project).

- Notion documentation: After formulating the review comments and summary, the assistant uses another tool (via MCP) to save the results to Notion (Model Context Protocol (MCP): A Guide With Demo Project). The “save to Notion” tool, implemented in our server, takes the summary text and creates a new page or comment in a specified Notion workspace. This could include the PR’s title, the AI’s findings, and any follow-up tasks. The MCP server, in this step, interacts with the Notion API to create the entry, then confirms back to the AI that the operation succeeded.

Throughout this process, the AI assistant (Claude) is orchestrating calls to two different services (GitHub and Notion) via one unified protocol. The developer didn’t have to manually copy-paste the PR diff into Claude or copy the output into Notion – MCP handled the data flow securely between the systems.
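The flow of data through this pipeline can be sketched with stand-in functions. Nothing below talks to GitHub, Notion, or a model: fetch_pr and save_to_notion are stubs named after the tools described above, the repository name and PR contents are made up, and toy_analysis stands in for whatever the assistant would actually write.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    title: str
    description: str
    diff: str


def fetch_pr(repo: str, number: int) -> PullRequest:
    """Stub for a tool that would call the GitHub API (returns made-up data)."""
    return PullRequest(
        title="Add retry to uploader",
        description="Retries failed uploads up to three times.",
        diff="+ retry(upload, attempts=3)",
    )


def save_to_notion(pages: list, title: str, body: str) -> dict:
    """Stub for a tool that would create a Notion page (appends to a list)."""
    pages.append({"title": title, "body": body})
    return {"ok": True, "page_count": len(pages)}


def toy_analysis(pr: PullRequest) -> str:
    """Stand-in for the model's review of the diff."""
    added = sum(1 for line in pr.diff.splitlines() if line.startswith("+"))
    return f"{pr.title}: {added} added line(s). {pr.description}"


def review_pull_request(repo: str, number: int, analyze, pages: list) -> dict:
    """Fetch the PR, analyze it, then record the review."""
    pr = fetch_pr(repo, number)
    review = analyze(pr)
    return save_to_notion(pages, pr.title, review)


notion_pages = []
confirmation = review_pull_request("example-org/example-repo", 1, toy_analysis, notion_pages)
print(confirmation)
print(notion_pages[0]["body"])
```

In the real setup the assistant decides when to call each tool. The fixed sequence here only shows which output feeds which input.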

Outcome: The end result is an automated, AI-assisted PR review. As soon as a pull request is opened, the team gets a summary in Notion detailing what the PR does, highlights of the code changes, and any potential issues the AI spotted. This can save developers time in initial code review and ensure nothing obvious is missed. According to a tutorial on this implementation, the MCP-based PR reviewer improved code analysis and documentation, supporting “automated PR analysis, easy collaboration, and structured documentation”. Developers can quickly retrieve PR details, get AI-generated insights on the changes, and have those insights stored in Notion for future reference (Model Context Protocol (MCP): A Guide With Demo Project). Essentially, MCP allowed Claude to function like a proactive team assistant: reading code from one platform and writing notes in another, all in a governed, repeatable way. This kind of workflow shows the power of MCP in real applications – it’s not just theory, but something you can set up today using available SDKs and a bit of scripting.

(It’s worth noting that the above example was inspired by a DataCamp tutorial project that walks through building exactly this – connecting Claude to GitHub and Notion via MCP – demonstrating how a few hundred lines of Python can tie together code hosting and documentation tools in a practical AI agent (Model Context Protocol (MCP): A Guide With Demo Project).)

Advanced Use Cases and Industry Adoption

Since its introduction, MCP has been gaining traction in both industry and the open-source community. Early adopters have already started using MCP to enhance their AI systems’ connectivity. For instance, fintech company Block (formerly Square) and Apollo were among the first enterprises to integrate MCP into their internal AI workflows (Introducing the Model Context Protocol \ Anthropic). Block’s CTO even remarked that open standards like MCP are “bridges that connect AI to real-world applications,” enabling agentic systems that take over the “mechanical” burdens of work so humans can focus on creative tasks (Introducing the Model Context Protocol \ Anthropic). This highlights MCP’s role in enterprise AI: enabling virtual assistants that can actually perform tasks (not just chat) by hooking into business data and tools in a secure, standardized way.

On the developer tools front, several platforms have begun building MCP into their products. Replit, a popular online IDE, and Sourcegraph, a code search and navigation tool, are working with MCP to let their AI features pull in richer context about your codebase and development environment (Introducing the Model Context Protocol \ Anthropic). Other dev-oriented adopters include the Zed editor and Codeium (an AI coding assistant), which are using MCP to allow AI agents to, say, open project files, query version control history, or retrieve relevant documentation when assisting a programmer (Introducing the Model Context Protocol \ Anthropic). By standardizing how these tools expose information, an AI helper in an IDE could seamlessly access your code, your issue tracker, and your knowledge base all through one protocol.

One of the most exciting domains for MCP is in powering multi-tool “agentic” workflows. An AI agent is an AI system that can autonomously break down tasks and use tools to accomplish goals. MCP provides an ideal backbone for such agents by giving them a menu of tools and data sources they can mix and match. For example, imagine an agent tasked with researching a topic and compiling a report. Using MCP, the agent could: search the web using a Brave Search server, fetch files or data from a company knowledge base, call a fact-checking API tool to verify information, and then save the final report to a network drive – all in one continuous reasoning loop (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents). Because MCP servers are self-describing, the agent can even discover new tools at runtime (for instance, if a new server comes online offering a translation service, a smart agent could incorporate it on the fly). This dynamic discovery and usage of tools makes agents highly adaptable. As one blog put it, MCP’s composability allows agents to “dynamically discover and use new tools and data sources, making them self-evolving”, able to leverage new capabilities without manual reprogramming (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents). We’re already seeing experimental agent frameworks integrate MCP as a way to pull in many services – for instance, chaining web browsing, database queries, and code execution in a single agent loop using MCP for each context access.
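Runtime discovery can be pictured as an agent rebuilding its tool catalog whenever the set of connected servers changes. The sketch below is one possible way to do that, with namespaced tool names to avoid collisions. The server names, tool names, and the build_catalog helper are all hypothetical.

```python
def build_catalog(servers: dict) -> dict:
    """Merge the tool lists of several servers into one namespaced catalog."""
    catalog = {}
    for server_name, tools in servers.items():
        for tool in tools:
            catalog[f"{server_name}.{tool['name']}"] = tool
    return catalog


# Tool lists as they might come back from each server's tools/list call
servers = {
    "search": [{"name": "web_search", "description": "Search the web"}],
    "files": [{"name": "read_file", "description": "Read a file"}],
}
catalog = build_catalog(servers)
print(sorted(catalog))  # ['files.read_file', 'search.web_search']

# A new server comes online: re-run discovery and the agent sees its tools
servers["translate"] = [{"name": "translate_text", "description": "Translate text"}]
catalog = build_catalog(servers)
print(sorted(catalog))
```

Because each tool carries its own description, the model can decide whether a newly listed tool is relevant without the agent code being changed.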

In summary, MCP is quickly becoming the “glue” for context-aware AI across different domains. Enterprises are adopting it to connect AI copilots with proprietary data securely, and developer platforms are using it to supercharge AI coding assistants. The open ecosystem means that a connector made by one team (say, a Salesforce CRM MCP server) can be used by another team’s AI agent with minimal effort. This reuse and interoperability is a major shift from the siloed integrations of the past. As the MCP ecosystem grows (with more community-contributed servers and clients), we could see an explosion of AI capabilities – similar to how the growth of public APIs fueled the web – but now standardized for AI consumption.

Security and Best Practices

With great power comes great responsibility: giving AI models access to arbitrary tools and data through MCP raises important security and trust considerations. The MCP protocol itself is designed with a few key safety principles in mind (outlined in the MCP specification) (Specification - Model Context Protocol), but it’s largely up to implementers to enforce policies that keep the system safe, private, and under control. Here are some best practices and built-in guidelines for using MCP securely:

-

Explicit User Consent and Control: Always ensure the user is in the loop for sensitive actions. The MCP spec requires that users must explicitly consent to any data access or operations the AI tries to perform (Specification - Model Context Protocol). In practice, this means the UI should ask the user before, say, opening a file or running a tool that could change something. For example, Claude Desktop will prompt the user to allow a tool invocation. The user should also be able to pause or stop MCP interactions at any time. Design your AI assistant such that it’s clear when it’s accessing external info and the user can say yes or no.

-

Authentication and Access Control: Treat each MCP server as a potentially sensitive gateway. If a server connects to an external API, it should use proper API keys/tokens and store them securely (as you would in any app). The communication between client and server (especially if using HTTP) should be encrypted (HTTPS) and, if applicable, authenticated. One goal of MCP is to handle authentication and usage policies in a consistent way (What is the Model Context Protocol (MCP)? — WorkOS) – for instance, an MCP client could manage API credentials and not send data to servers that aren’t authorized. In practice, ensure your servers only run in environments you trust (e.g. localhost for local data, or secure servers for remote services) and verify the identity of remote servers if needed (currently MCP doesn’t have an auth handshake built-in, so network-level security is the way). Also leverage roots for sandboxing: when configuring an MCP server, you can often specify a root path or scope (for example, only allow the filesystem server to see a specific directory) (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents). This limits the blast radius of any data access.

-

Data Privacy: Be mindful of what data is being exposed to the model and beyond. Hosts should never send private user data to an MCP server without permission (Specification - Model Context Protocol), and servers should not arbitrarily transmit data elsewhere. If your server reads files or database entries, think about whether that data should be summarized rather than sent in full, or whether it contains sensitive info that the AI (or the open internet, if it’s a web tool) shouldn’t see. Implement access controls – e.g., a server connecting to corporate data should enforce the same permissions as the user running it would have. All data should stay within the user’s approved boundary.

-

Tool Safety and Sandboxing: Because MCP tools can execute code or make changes, they must be treated with caution. As a rule, the AI should not auto-execute tools without user approval (and most MCP clients enforce this by requiring a button click or user command) (Specification - Model Context Protocol). From the developer side, assume that tool descriptions (which come from servers) could be malicious or incorrect – the client should treat them as untrusted text to show the user, not as a guarantee of what the tool actually does (Specification - Model Context Protocol). Running a tool is effectively running code, so sandbox what you can. If your tool opens a subprocess or accesses the OS, run it with least privilege. Containerization or restricted API tokens can help. Also consider rate limits or usage policies: e.g., you might not want an AI agent calling an email-sending tool 1000 times in a minute by accident.

-

LLM Response Filtering: The sampling feature (where a server can ask the model to generate text) adds another vector to monitor. The spec advises that “users must explicitly approve any LLM sampling requests” and have control over the prompt that will be sent (Specification - Model Context Protocol). This prevents a server from tricking the model into revealing something it shouldn’t or from spamming the model with junk. As the host, if you implement sampling, always show the user what will be asked of the model and let them confirm. Also, once the model responds, consider filtering the output if it’s going back to a server. In short, maintain human oversight on any AI <-> server recursive interactions.

-

Audit Logging: For accountability and debugging, it’s a good idea to log all MCP interactions in a secure log. This means recording which tools were invoked, which resources were accessed, and when. In regulated industries, these logs are crucial for compliance (they make auditing much easier by providing a clear record of what the AI accessed or did) (What is the Model Context Protocol (MCP)? — WorkOS). The logs should include timestamps, the identity of the user or process that initiated the action, and success/failure info. MCP’s design facilitates this by structuring everything as JSON messages – you can log those messages or higher-level events. Just be sure not to log sensitive data in plaintext if the logs are stored long-term.
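The consent and audit-logging practices above can be combined in one small wrapper around tool calls. The following is a minimal sketch in plain Python, not part of any MCP SDK: `guarded_call`, `audit_record`, and the `approve` callback are hypothetical names, and a real host would replace `approve` with its own confirmation UI and write the log lines to secure storage.

```python
import hashlib
import json
import time
from typing import Any, Callable


def audit_record(user: str, tool: str, arguments: dict, outcome: str) -> str:
    """Build one JSON log line; arguments are hashed, not stored in plaintext."""
    digest = hashlib.sha256(
        json.dumps(arguments, sort_keys=True).encode("utf-8")
    ).hexdigest()
    return json.dumps({
        "ts": time.time(),        # when the action was attempted
        "user": user,             # who initiated it
        "tool": tool,             # which tool was requested
        "args_sha256": digest,    # fingerprint of the arguments
        "outcome": outcome,       # "ok", "denied", or "error"
    })


def guarded_call(
    user: str,
    tool: str,
    arguments: dict,
    run_tool: Callable[[dict], Any],
    approve: Callable[[str, dict], bool],
    log: list,
) -> Any:
    """Run a tool only after approval, logging the outcome either way."""
    if not approve(tool, arguments):
        log.append(audit_record(user, tool, arguments, "denied"))
        return None
    try:
        result = run_tool(arguments)
    except Exception:
        log.append(audit_record(user, tool, arguments, "error"))
        raise
    log.append(audit_record(user, tool, arguments, "ok"))
    return result
```

Hashing the arguments is one way to keep sensitive values out of long-lived logs while still letting you correlate repeated calls; whether that trade-off suits your compliance needs is a judgment call.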

By following these best practices, you can mitigate risks while harnessing MCP’s power. The MCP spec cannot enforce security at the protocol level, but it strongly recommends that implementers build robust consent flows, clear UX, and permission checks (Specification - Model Context Protocol). In practical terms: keep the user in charge, keep the scope limited, and treat each integration point as a potential security boundary. If done properly, MCP can actually improve security compared to ad-hoc integrations – because you’ll have consistent, well-logged, and user-mediated access across all tools, instead of random scripts running unchecked. Always remember that an AI agent using MCP is only as safe as the guardrails you put around it.
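To make "keep the scope limited" concrete, here is a rough sketch of two guardrails a server author might add: confining file access to a configured root directory, and capping how often a tool can be called. Both `is_within_root` and `RateLimiter` are hypothetical helpers written with the standard library only; they are not provided by MCP or its SDKs.

```python
import time
from collections import deque
from pathlib import Path


def is_within_root(root: str, requested: str) -> bool:
    """True only if `requested`, once resolved, stays inside `root`."""
    root_path = Path(root).resolve()
    # Resolving collapses ".." segments and follows existing symlinks.
    target = (root_path / requested).resolve()
    return target == root_path or root_path in target.parents


class RateLimiter:
    """Allow at most `max_calls` within a sliding window of `window_s` seconds."""

    def __init__(self, max_calls: int, window_s: float) -> None:
        self.max_calls = max_calls
        self.window_s = window_s
        self._calls = deque()  # timestamps of recently allowed calls

    def allow(self, now=None) -> bool:
        now = time.monotonic() if now is None else now
        # Drop timestamps that have aged out of the window.
        while self._calls and now - self._calls[0] >= self.window_s:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            return False
        self._calls.append(now)
        return True
```

A filesystem-style tool could call `is_within_root` before opening any path, and an email-sending tool could check `allow()` before each send and return an error result when the limit is hit.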

Getting Started with MCP

Ready to dive in and experiment with MCP? A good starting point is the official MCP documentation and community resources:

-

Official Docs and SDKs: The primary resource is the MCP website and spec. The [Model Context Protocol User Guide][13] provides tutorials and explanations of core concepts, and the open-source [MCP Specification][25] details every message and structure in the protocol. You can also find SDKs on GitHub – for example, the [Python SDK][7] and [TypeScript SDK][2] – which come with README guides and examples on building servers/clients. Anthropic has also open-sourced a bunch of example MCP servers (for Google Drive, Slack, GitHub, etc.) in a GitHub repository (Introducing the Model Context Protocol \ Anthropic), which you can study and reuse in your own projects.

-

Community Tutorials and Blogs: Since MCP is new, the community is actively producing learning materials. One great walkthrough is the DataCamp tutorial “MCP: A Guide With Demo Project”, which, as we discussed, builds a practical PR review assistant step-by-step (connecting Claude to GitHub and Notion) (Model Context Protocol (MCP): A Guide With Demo Project). It’s a hands-on way to see how to set up environment variables, implement each tool, and wire everything together. Another recommended read is “The Model Context Protocol (MCP) — A Complete Tutorial” by Dr. Nimrita Koul (The Model Context Protocol (MCP) — A Complete Tutorial), which covers MCP’s background and includes code examples and insights from an AI engineer’s perspective. Additionally, tech consultancies and enthusiasts are blogging about MCP – for example, BlueTick Consultants published an implementation guide breaking down MCP’s architecture and benefits (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents), and glama.ai’s blog wrote a quickstart with tips on testing MCP in Claude and exploring early use cases (Introducing Model Context Protocol (MCP)). These third-party resources can offer different angles and tips/tricks for working with MCP in the field.

-

Community Forums and Support: Being an open standard, MCP has a growing developer community. You can join the official Model Context Protocol Discord to ask questions or share ideas – the Discord was mentioned as a go-to place for Q&A (Introducing Model Context Protocol (MCP)). There are also GitHub Discussions in the MCP repositories for issue tracking and feature requests. If you prefer more traditional forums, look out for MCP threads on Reddit (in AI or dev communities) and posts on Twitter/X by AI developers discussing their experiments. As MCP is quite new, early adopters are very eager to collaborate and learn from each other’s experiences.

-

Learning by Doing: Perhaps the best way to get started is to try building a simple MCP integration of your own. For instance, if you have an AI app already, see if you can add an MCP server to connect a data source you care about – maybe a local folder of text files, or a weather API, or a database of FAQ answers. Use the SDK in your language of choice, follow a quickstart example (like the calculator we showed earlier), and then iterate. Run your AI client (or Claude Desktop) and test out loading a resource or calling a tool. Starting small will help you get a feel for the request/response cycle and how the AI utilizes the context.
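Before reaching for an SDK, it can help to see the request/response cycle in miniature. The sketch below is a deliberately simplified, in-process stand-in for a server: it answers `tools/list` and `tools/call` JSON-RPC 2.0 messages for a single calculator-style tool. The `TOOLS` registry and `handle` function are assumptions made for illustration, and the initialization handshake, transports, and notifications that a real MCP server needs are omitted.

```python
import json

# Hypothetical in-memory tool registry; a real server would build this via an SDK.
TOOLS = {
    "add": {
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def error(req_id, code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Answer a single JSON-RPC 2.0 request for tools/list or tools/call."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(req_id, -32602, "Unknown tool")  # invalid params
        value = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        return error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


request = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                      "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
print(handle(request))
```

Once this cycle feels familiar, swapping the hand-rolled dispatcher for an official SDK mostly means letting the library generate the schema and handle the transport for you.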

MCP is still evolving (expect improvements in transports, security, and more servers contributed over time), but it’s already usable and useful. The official roadmap and “What’s New” pages will keep you updated on changes (Model Context Protocol (MCP)). By engaging with the community and experimenting, you can be at the forefront of this new layer of the AI tech stack. Whether you’re a developer looking to supercharge an AI app with external data, or a researcher exploring agent architectures, MCP provides the scaffold to do so in a robust and standard way.

Conclusion

The Model Context Protocol represents a significant step forward in making AI assistants more context-aware, versatile, and scalable. By decoupling the AI from the specifics of any one tool or database, MCP enables a world where an assistant can fluidly tap into many sources of knowledge and action. We’ve seen how it standardizes integrations (much like how the Language Server Protocol standardized IDE integrations (The Model Context Protocol (MCP) — A Complete Tutorial)), eliminating redundant work and fostering an ecosystem of reusable connectors. An AI application that’s MCP-compatible today can readily plug into tomorrow’s new MCP server for a fresh tool, and vice versa – this interoperability is a game-changer (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents).

To recap, MCP’s key benefits include standardization (a unified way to interface AI with external systems, reducing fragmentation) (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents), interoperability (any client works with any server, allowing mix-and-match of AI models and tools) (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents), and scalability and maintainability (you can add or swap out integrations without rewriting your AI’s code, and organizations can separate concerns between data teams and AI teams) (Anthropic’s Model Context Protocol (MCP) for AI Applications and Agents). These strengths make MCP a foundational layer for building AI systems that are not just smart in isolation, but deeply connected to the world’s information and services.

For developers and researchers, the call to action is clear: if you’re building an AI-powered application or agent, consider giving MCP a try. Implement a simple server or integrate an existing one, and see how it can expand your AI’s capabilities with minimal effort. As more companies like Block and platforms like Replit adopt MCP, skills in this protocol will be increasingly valuable for creating the next generation of AI applications. Moreover, by contributing to the MCP ecosystem (be it by writing a new server, improving a client SDK, or sharing feedback), you’ll be helping shape an open standard at its early stages.

In essence, MCP matters because it unlocks a future where AI is not a black-box stuck behind a chat interface, but an active participant in our digital ecosystem – one that can fetch data, use tools, and take actions, all under safe and standardized guidelines. We’re moving toward AI that can truly assist in real workflows, and MCP is a big piece of that puzzle. So plug in, explore what MCP can do for your AI projects, and contribute to this exciting journey of connected intelligence!

Workshop Video Highlights: “Building Agents with Model Context Protocol”

To further enrich our understanding, let’s briefly recap key points from Mahesh Murag’s workshop on MCP (from the AI Engineer Summit, Feb 2025). This 2-hour session provides a live deep-dive into MCP’s concepts and an example of building an agent. (Timestamps denote the approximate time in the video (AI Engineer - Building Agents with Model Context Protocol - YouTube).)

-

00:00 – “What is MCP?” – The workshop opens with an overview of the motivation behind MCP. Mahesh explains the context problem (AI systems isolated from data) and introduces MCP as the solution, likening it to giving LLMs “superpowers” they didn’t have before by allowing them to use tools and access live information. He covers the basic architecture (clients, servers, JSON-RPC communication) and draws parallels to the Language Server Protocol to convey how MCP standardizes AI-tool interactions.

-

09:39 – “Building with MCP” – Around this point, the session shifts into a live coding demonstration. Mahesh walks through setting up a simple MCP server from scratch. For example, he demonstrates creating a server that connects to a Weather API: defining a `get_weather` tool, running the server, and then connecting an AI client to ask “What’s the weather in San Francisco?”. We see Claude (as the AI) call the tool via MCP and return an answer with real data. This segment highlights the developer experience: using the MCP SDK, handling schema definitions for tool inputs/outputs, and testing the integration. Attendees get to see how quickly an AI’s capabilities can be extended (in minutes) using MCP.

-

26:25 – “MCP & Agents” – In the next part of the workshop, the focus shifts to agentic behavior enabled by MCP. Mahesh showcases an example of an AI agent that can tackle a more complex task by using multiple tools in sequence. One scenario discussed is a travel planning agent: it uses an MCP server to search flights, another to fetch weather forecasts, and another to post a summary itinerary to an app. The concept of the AI autonomously deciding which tools to use and when (based on the conversation goals) is explored. Here Mahesh also introduces the sampling mechanism – how an MCP server can prompt the AI to think or compute something mid-process (essential for multi-step planning). The workshop emphasizes how MCP provides the plumbing for agents to coordinate tools, and Mahesh shares some best practices (like approving tool usage and having fallback plans if a tool fails).

-

1:13:15 – “What’s next for MCP?” – Finally, Mahesh discusses the future roadmap and takes questions. He mentions upcoming improvements such as more robust HTTP support (to make connecting over networks easier), a possible registry of MCP servers to discover available integrations globally (Model Context Protocol (MCP)), and ideas like a `.well-known/mcp.json` convention for websites to advertise AI-accessible APIs (Model Context Protocol (MCP)). There’s excitement about community contributions – several attendees had built MCP servers (one question came from someone who made a Gmail MCP server, asking about security). Mahesh addresses security, reiterating the importance of user consent and sandboxing (echoing what we covered above). He also hints at collaborations with other AI frameworks and how MCP could become a layer that different AI vendors support, ensuring that tool integrations become model-agnostic. The Q&A touches on real-world deployment: how to run MCP servers in cloud environments, how to scale them, and monitoring considerations. In closing, Mahesh encourages developers to experiment with MCP and join the community, as “we’ve only scratched the surface of what’s possible when AI can reliably interact with other systems.”
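As a rough illustration of two ideas from the highlights above (schema definitions for tool inputs, and having a fallback when a tool fails), here is a small sketch. It is not the workshop’s code: the `GET_WEATHER` descriptor, `missing_arguments`, and `call_with_fallback` are hypothetical, and the descriptor only mirrors the name/description/inputSchema shape used in MCP tool listings.

```python
from typing import Callable

# Hypothetical descriptor for a get_weather tool (illustrative values only).
GET_WEATHER = {
    "name": "get_weather",
    "description": "Return a short weather summary for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def missing_arguments(descriptor: dict, arguments: dict) -> list:
    """List required arguments that the caller did not supply."""
    required = descriptor["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


def call_with_fallback(
    primary: Callable[[dict], str],
    fallback: Callable[[dict], str],
    arguments: dict,
) -> str:
    """Check required inputs, then try the primary tool and fall back on failure."""
    missing = missing_arguments(GET_WEATHER, arguments)
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    try:
        return primary(arguments)
    except Exception:
        # Any failure in the primary tool (timeout, API error) triggers the fallback.
        return fallback(arguments)
```

In an agent, `primary` might wrap a live weather API and `fallback` might return a cached value or a plain "no live data" message, so the plan can continue instead of stalling on one failed call.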

This workshop effectively ties together the concepts we’ve discussed in this blog with hands-on examples and forward-looking discussion. If you’re interested, it’s worth watching to see MCP in action and to hear the thought process of its creators. The timestamps above can help you navigate to the sections most relevant to your interests. Happy building with MCP!
